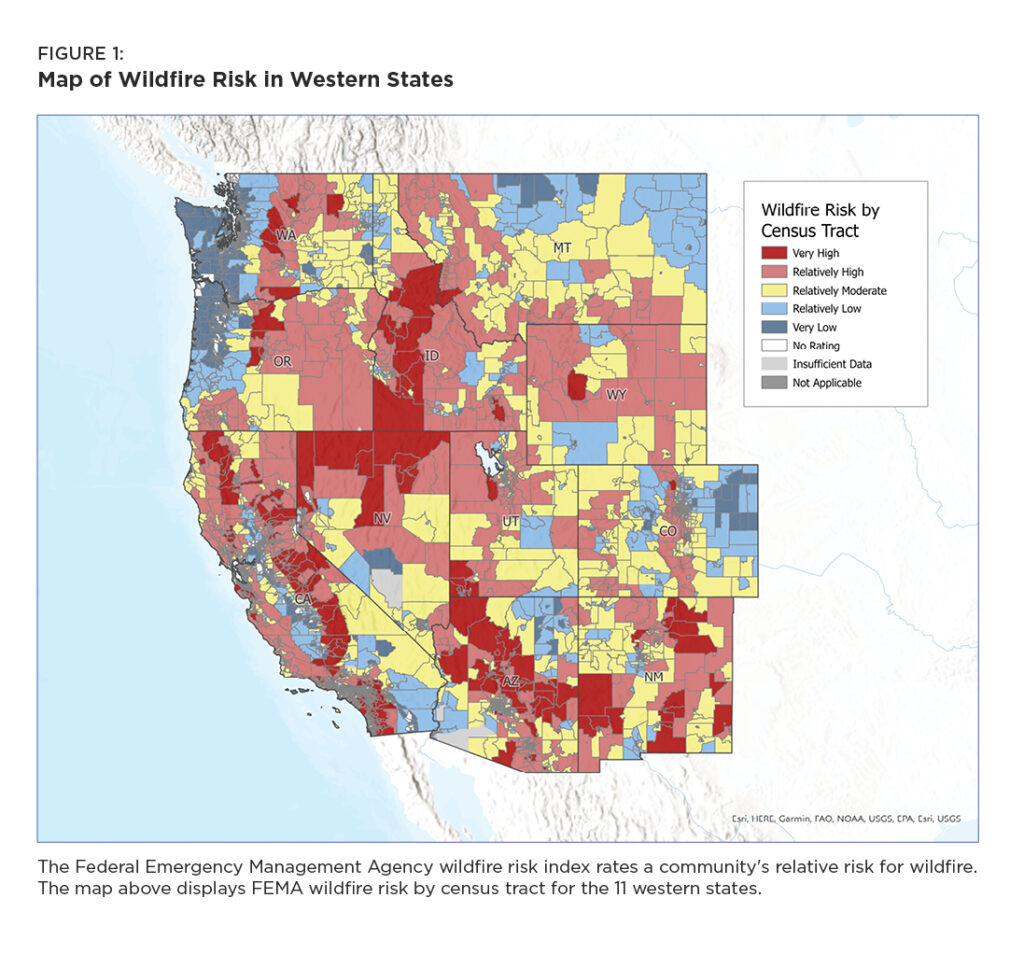

Montanans are no strangers to the growing wildfire problem. Today’s “megafires” scorch forests, degrade water quality, decimate habitat and choke the air with smoke. Since 2005, the United States has three times eclipsed 10 million acres burned by wildfires in a year (an unfathomable total just a few decades ago), with the vast majority of that acreage concentrated in the West. In Montana alone, more than 2.6 million acres burned between 2017 and 2021, and nearly nine million acres of forest land are at high or very high risk of wildfire. Nearly 100,000 structures have burned in wildfires since 2005, with two-thirds of that destruction occurring since 2017.

As with any large, complex phenomenon, no single factor explains the growing wildfire crisis. Past management decisions led to a dangerous accumulation of dead and diseased trees, small trees, shrubs and other fuels. A changing climate has lengthened the wildfire season, the period of the year in which dry and hot conditions make it more likely that a fire will ignite and spread. And development in the wildland-urban interface, the place where human development and wild areas meet, has increased the potential for human-caused ignitions.

The critical question is what can be done to tackle the wildfire crisis. Some of these factors require long-term policy and economic changes that will take decades to affect fire regimes. But as recent wildfires have shown, other factors can be addressed now, producing immediate benefits. One such opportunity is the use of prescribed fire, in which low-intensity fire is carefully applied to a landscape under controlled conditions to improve forest resilience, reduce extreme wildfire risks and achieve other land-management objectives. Time and again, when wildfires have spread to areas that have been intentionally managed with prescribed fire, those fires have become less destructive and easier to fight.